Facebook has a big problem with fake accounts — and the company knows it. In just three months, Facebook might take down almost as many fake accounts as there are real ones on the site.

The social network today revealed its latest method of finding the fakes: a new machine learning model it calls Deep Entity Classification (DEC). Facebook says the system has already detected hundreds of millions of violating accounts.

DEC was designed to overcome the limitations of traditional methods of detecting fake accounts, which typically focus on the user profile. This can work pretty well when an account displays highly suspicious behavior, like posting hundreds of pictures of Ray-Ban sunglasses. However, sophisticated attackers can fool these types of systems by subtly adapting their behavior, such as adjusting their number of friends and the time they keep the account active.

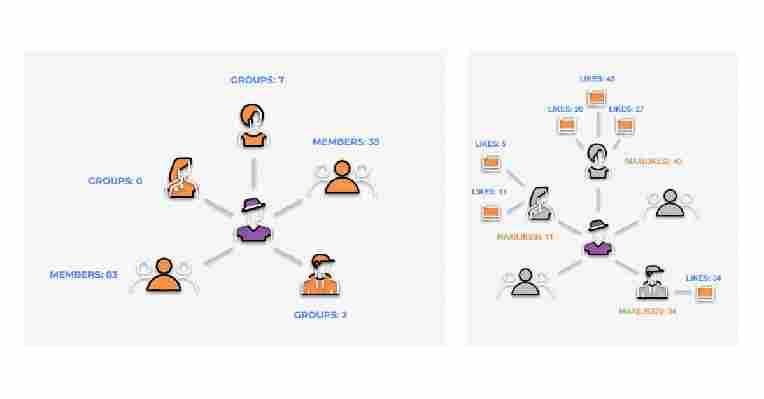

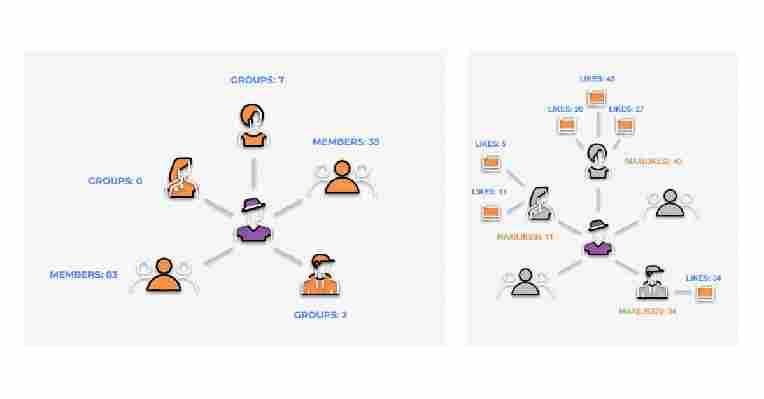

The new system addresses this by looking at deeper features. It finds these by connecting a suspect account to all the friends, groups and pages with which it interacts.

It will then investigate all of these connected entities. The friends will give off signals through their ages, the groups they join, and the number of friends that they have. Groups can be assessed based on the members they attract and the admins that they have. And pages can be evaluated by their number of admins.

By analyzing the behaviors and properties of all these entities, the model gets a strong idea about whether an account is fake.
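As a rough illustration of the idea, the sketch below aggregates properties of an account's friends, groups and pages into a single feature dictionary. It is a minimal sketch under stated assumptions, not Facebook's implementation: the classes `Friend`, `Group`, `Page` and `Account`, the function `deep_features`, and every feature name are hypothetical, chosen only to mirror the signals described above. The classifier that would consume these features is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import mean


# Hypothetical entity types; the fields mirror the signals named in the article.
@dataclass
class Friend:
    age: int
    friend_count: int
    group_count: int


@dataclass
class Group:
    member_count: int
    admin_count: int


@dataclass
class Page:
    admin_count: int


@dataclass
class Account:
    friends: list[Friend] = field(default_factory=list)
    groups: list[Group] = field(default_factory=list)
    pages: list[Page] = field(default_factory=list)


def _mean_or_zero(values) -> float:
    """Average a sequence of numbers, returning 0.0 when it is empty."""
    values = list(values)
    return float(mean(values)) if values else 0.0


def deep_features(account: Account) -> dict[str, float]:
    """Summarize the entities connected to an account (assumed feature set)."""
    return {
        "friend_count": float(len(account.friends)),
        "mean_friend_age": _mean_or_zero(f.age for f in account.friends),
        "mean_friend_friend_count": _mean_or_zero(
            f.friend_count for f in account.friends
        ),
        "mean_friend_group_count": _mean_or_zero(
            f.group_count for f in account.friends
        ),
        "mean_group_member_count": _mean_or_zero(
            g.member_count for g in account.groups
        ),
        "mean_group_admin_count": _mean_or_zero(
            g.admin_count for g in account.groups
        ),
        "mean_page_admin_count": _mean_or_zero(
            p.admin_count for p in account.pages
        ),
    }
```

The point of the design is that these values describe the account's neighbors rather than the account itself, so an attacker who tunes only their own profile leaves them largely unchanged.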

But if the model does get a classification wrong, the account enters an appeals process that gives the accused a chance to respond before they’re banned.

“This is defense-in-depth,” said Brad Shuttleworth, Facebook’s Product Manager for Community Integrity. “It’s these two sets of systems working closely together and compensating for strengths or weaknesses of the other.”

Fake friends

Policing Facebook can be a tricky task. Human analysts alone can’t manage the enormous scale, while machines struggle with the subtleties in behavior of both fake accounts and real ones.

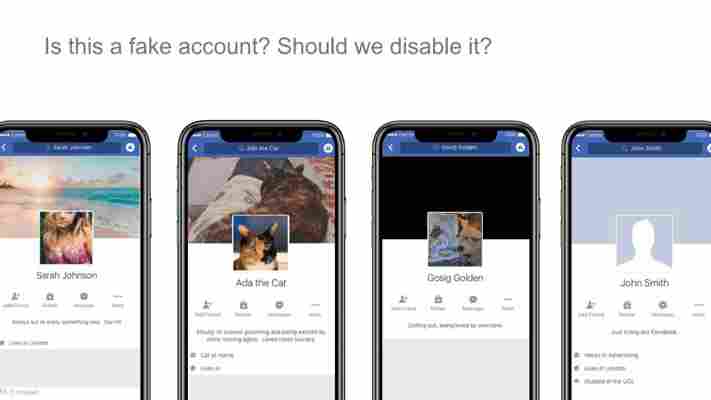

Not all of the accounts that look fake have malicious intentions.

“When people say fake, they often mean suspicious,” said Bochra Gharbaoui, a Data Science Manager in Facebook’s Community Integrity unit.

“They’re not sure of what the intent of the account is, and it may also be that they’re seeing behavior on Facebook which doesn’t align with how they expect people to behave on the platform.”

These expectations can be a reflection of cultural differences. For example, in some parts of the world “friending” is only common with people you’ve met, while in other regions users often add friends that they’ve never met before.

To avoid discriminating against different cultures, Facebook’s Community Standards team only focuses on universal values.

Another issue is that fake accounts aren’t always malicious. They could just be businesses, organizations or “non-human entities” (a pet cat, someone roleplaying Abraham Lincoln, or, presumably, an alien) that have been misclassified as accounts instead of pages.

The violating accounts Facebook wants to disable are those that intend to cause harm. These are typically created by scammers and spammers trying to get your cash. Sometimes the company finds billions of them in a single quarter.

Facebook’s latest Community Standards Enforcement Report suggests that its approach is working. Across the two years the report covers, more than 99.5% of the violating accounts the company took action against were found before users had reported them.

Still, it’s difficult to know how many fake accounts the company might have missed. Facebook estimates that about 5% of monthly active accounts are fake, but admits that it can’t be sure about the numbers.

Nonetheless, Facebook is confident that its new system is working well, and is keen to assure critics that humans remain in the loop.

“This is the place where we see machine learning and human review working in concert forever,” said Shuttleworth.

The system also struggles to identify political motivations. Facebook has created dedicated teams to investigate those types of attackers, such as an Information Operations unit that focuses on nation-state actors. But the lessons from DEC could support their analysis.

They have a big test coming soon on how well this work is going: The US Presidential election is only nine months away.